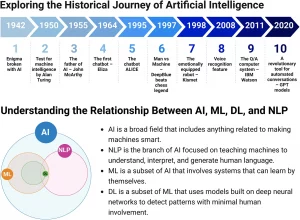

Artificial Intelligence (AI) has permeated our everyday lives in ways that might have seemed like science fiction just a few decades ago. Machine learning, a subset of AI, plays a vital role in many aspects of our lives, from the voice assistants on our phones to the sophisticated recommendation systems that guide our online purchases. However, as AI advances at a dizzying pace, a growing set of ethical issues is emerging. The two most urgent are bias in AI and responsibility for the decisions that AI systems make.

Bias in Artificial Intelligence

Bias in AI is a phenomenon that occurs when an algorithm produces results that are systematically prejudiced in favor of or against certain groups of individuals. This can happen when the data on which the AI is trained reflect the existing biases in society.

Let’s consider a concrete example. Suppose a company decides to use a machine learning algorithm to enhance its hiring process. The company’s historical data show that leadership positions have traditionally been filled by men. If the algorithm is trained on this data, it can “learn” that male candidates are more suited for leadership roles. As a result, it can develop a gender bias, favoring male candidates in its recommendations for future hires.

This is not a hypothetical case. In 2018, Reuters reported that Amazon had abandoned an experimental recruiting tool after finding that it had developed a gender bias. The tool had been trained on resumes submitted to the company over roughly a decade, most of which came from men, and it learned to downgrade resumes that signaled a female applicant. The repercussions of such a bias are real and significant: wherever a tool like this is relied on, women are systematically disadvantaged in the hiring process.
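One way to make the hiring scenario above concrete is to compare how often a model recommends candidates from each group. The sketch below is illustrative only: the records are made up, and the names `RECOMMENDATIONS`, `selection_rates`, and `disparate_impact_ratio` are hypothetical helpers, not part of any real library or of any company's system. It computes the selection rate per group and the ratio of the lowest rate to the highest.

```python
from collections import defaultdict

# Hypothetical, hand-made records: (gender, was_recommended).
# These values are illustrative only and come from no real company.
RECOMMENDATIONS = [
    ("male", True), ("male", True), ("male", True), ("male", False),
    ("female", True), ("female", False), ("female", False), ("female", False),
]


def selection_rates(records):
    """Return the fraction of candidates recommended within each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return the lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any recommended candidates")
    return min(rates.values()) / highest


rates = selection_rates(RECOMMENDATIONS)
ratio = disparate_impact_ratio(rates)
print(rates)            # selection rate per group
print(round(ratio, 2))  # a ratio well below 1.0 signals unequal treatment
```

A ratio close to 1.0 means the groups are recommended at similar rates. A common rule of thumb, the "four-fifths rule" from US employment guidelines, treats a ratio below 0.8 as a warning sign worth investigating. A check like this only detects a disparity; it does not explain its cause or fix it.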

Responsibility for Automated Decisions

A second major ethical issue related to AI concerns the responsibility for decisions made by an algorithm. Who should be held accountable when an AI system makes a choice that causes harm? This is still largely uncharted territory, both ethically and legally.

A telling example involves autonomous cars. Suppose an autonomous car causes an accident resulting in material damage or even physical injury. Who is responsible in this scenario? Is it the car’s manufacturer, for not building a safe autonomous driving system? Are the developers of the autonomous driving software responsible, for not adequately foreseeing and managing the case that led to the accident? Or is it the car’s owner, for choosing to use the autonomous driving mode?

Conclusion

The rise of AI has brought with it a myriad of benefits, including increased efficiency, personalization, and convenience. However, we cannot ignore the ethical implications that arise from the increasingly widespread use of AI. Issues of bias and responsibility are particularly pressing matters that demand our attention.

In terms of potential solutions, we could establish clear guidelines on who is responsible for decisions made by AI, and encourage training techniques that detect and reduce bias. No technique removes bias entirely, so regular auditing matters as well. It's also important to educate the public and AI developers about these issues and about strategies to mitigate them. This might include promoting ethical practices in AI programming and development, as well as encouraging greater diversity in the AI field.

The task before us is not just to create smarter AI, but also to create AI that is ethical, fair, and accountable. The ethical implications of AI are not an optional add-on, but a fundamental component of its responsible development.