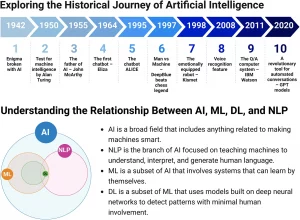

In the field of artificial intelligence (AI), generative large language models (LLMs) have marked a significant breakthrough, demonstrating a remarkable ability to generate text, translate languages, write creative content, and answer questions informatively. However, the evolution of AI has led to a new class of systems: AI-based agents. While both rely on natural language understanding and generation, they differ profoundly in architecture, functionality, and applicability. This article explores the fundamental distinctions between LLMs and agents and considers what these differences imply for various applications.

Limitations of Generative Language Models: A Look into Their Architecture

Generative language models, such as GPT-3 and similar models, are large neural networks trained on massive amounts of textual data. This training enables them to learn the intricacies of language, including syntax and semantics, along with general world knowledge. Upon receiving an input, the model generates an output based on the patterns and relationships it learned during training.

However, this very architecture imposes inherent limitations. First, LLMs are confined to the knowledge contained in their training data. They cannot look up information beyond this corpus, so their knowledge is static and cannot be updated in real time. Moreover, they cannot interact directly with the external world. Their interaction is limited to exchanging text, meaning they cannot manipulate objects, act on the physical environment, or access external services and data except through a predefined textual interface.

Another limitation of models is their mode of reasoning. Their response to a prompt relies on a single inference or prediction. Without additional scaffolding, they do not carry out multi-step reasoning in which decisions depend on intermediate evaluations, and they do not adapt to unforeseen situations. Furthermore, they do not natively handle the history of interactions: they have no persistent memory and treat each new prompt as an isolated input. Finally, LLMs are not autonomous. They operate solely in response to an input and cannot take proactive action.
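The lack of persistent memory can be illustrated with a minimal sketch. Here `fake_model` is a hypothetical stand-in for an LLM call, not a real API: it is a pure function of its prompt, so anything it should "remember" has to be sent again by the caller.

```python
from typing import List


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: the output depends only on this prompt."""
    # Toy "inference": the name is known only if it appears in the prompt itself.
    if "my name is Ada" in prompt and "What is my name?" in prompt:
        return "Your name is Ada."
    return "I don't know your name."


# Two isolated calls: the second call has no access to the first.
fake_model("Hello, my name is Ada.")
isolated_answer = fake_model("What is my name?")

# The caller provides "memory" by replaying the history inside the prompt.
history: List[str] = ["Hello, my name is Ada."]
history.append("What is my name?")
with_history_answer = fake_model("\n".join(history))

print(isolated_answer)
print(with_history_answer)
```

The model itself never changes between calls; only the prompt does. Any sense of continuity comes from the code around the model.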

AI-Based Agents: An Evolution of AI Capability

AI-based agents represent an evolutionary step in the field of AI. Instead of being limited to text generation, agents aim to be intelligent entities capable of perceiving, reasoning, planning, and acting in the world. To this end, agents overcome the limitations of generative language models by integrating tools, cognitive architectures, and environmental interaction capabilities.

The most significant distinction between agents and models lies in their ability to interact with the external world. Agents can utilize a variety of tools to access real-time data, manipulate information, control external systems, and perform actions in the real world. These tools can be extensions, API functions, databases, or other external systems. Instead of being limited to text input, they can interact with data, images, audio, or any other relevant source.
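As a rough sketch of what "tools" can look like in code, the example below registers two plain Python functions under string names so that an agent can call them by name. Everything here is an assumption made for illustration: the `INVENTORY` data store, the tool names, and the `call_tool` helper are hypothetical and do not come from any particular agent framework.

```python
from typing import Callable, Dict

# Hypothetical in-memory data store standing in for a database or external API.
INVENTORY = {"widget": 12, "gadget": 0}


def stock_lookup(item: str) -> str:
    """Tool: read the current stock level for an item."""
    count = INVENTORY.get(item)
    if count is None:
        return f"unknown item: {item}"
    return f"{item}: {count} in stock"


def add_numbers(expr: str) -> str:
    """Tool: sum whitespace-separated numbers, e.g. '2 3.5'."""
    return str(sum(float(x) for x in expr.split()))


# The registry maps tool names (as the agent would write them) to functions.
TOOLS: Dict[str, Callable[[str], str]] = {
    "stock_lookup": stock_lookup,
    "add": add_numbers,
}


def call_tool(name: str, argument: str) -> str:
    """Dispatch a tool call by name, returning an error string for unknown tools."""
    tool = TOOLS.get(name)
    if tool is None:
        return f"error: no tool named {name!r}"
    return tool(argument)


print(call_tool("stock_lookup", "widget"))
print(call_tool("add", "2 3.5"))
```

Returning an error string instead of raising keeps the result in the same textual channel the agent reads, so it can react to a failed call like any other observation.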

Furthermore, AI-based agents are equipped with a native logic layer. This layer includes reasoning frameworks that enable them to plan, infer, and make decisions. Techniques such as Chain-of-Thought (CoT) and ReAct allow agents to break down complex problems into intermediate steps, reason about each step, and arrive at a final solution. Agents can also manage session history, so they keep track of the context of an interaction and take previous exchanges into account when making decisions.
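The ReAct pattern of alternating thoughts, actions, and observations can be sketched as a small loop. This is a simplified illustration under stated assumptions: `scripted_policy` is a hypothetical, hard-coded stand-in for the language model, and the `price` and `multiply` tools and the `Action: name[argument]` text format are invented for the example.

```python
import re
from typing import List, Tuple


def scripted_policy(scratchpad: List[str]) -> str:
    """Hypothetical stand-in for the LLM: chooses the next step from the trace so far."""
    observations = [line for line in scratchpad if line.startswith("Observation:")]
    if not observations:
        return "Thought: I need the unit price.\nAction: price[widget]"
    if len(observations) == 1:
        price = observations[0].split(": ", 1)[1]
        return f"Thought: Multiply the price by 3.\nAction: multiply[{price} 3]"
    total = observations[-1].split(": ", 1)[1]
    return f"Thought: I have the total.\nFinal: {total}"


# Hypothetical tools, each taking and returning a string.
TOOLS = {
    "price": lambda item: {"widget": "4"}.get(item, "0"),
    "multiply": lambda args: str(int(args.split()[0]) * int(args.split()[1])),
}

ACTION = re.compile(r"Action: (\w+)\[(.*)\]")


def react(question: str, max_steps: int = 5) -> Tuple[str, List[str]]:
    """Run the thought/action/observation loop until a final answer or the step limit."""
    scratchpad = [f"Question: {question}"]  # session history for this task
    for _ in range(max_steps):
        step = scripted_policy(scratchpad)
        scratchpad.append(step)
        if "Final: " in step:
            return step.split("Final: ", 1)[1], scratchpad
        match = ACTION.search(step)
        if match is None:
            break  # the policy produced neither an action nor a final answer
        name, arg = match.groups()
        scratchpad.append(f"Observation: {TOOLS[name](arg)}")
    return "no answer", scratchpad


answer, trace = react("What do 3 widgets cost?")
print(answer)
```

The scratchpad plays the role of session history: each new step is chosen with all earlier thoughts and observations in view, which is what separates this loop from a single isolated inference.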

Moreover, agents are autonomous. They can be given specific objectives and act independently to achieve them, even without step-by-step instructions. They can monitor the state of their environment, adapt their plans, and take the initiative to complete their tasks. Finally, AI-based agents use cognitive architectures that allow them to process information, make decisions, store state, and refine their actions.
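A toy perceive-decide-act loop shows how autonomy differs from answering a prompt. The `Room` environment and `ThermostatAgent` below are hypothetical, deliberately simple, and involve no language model. The point is only the control flow: the agent receives a goal once, then observes and acts on its own until the goal is met.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Room:
    """Hypothetical toy environment: a room whose temperature the agent can change."""
    temperature: float

    def apply(self, action: str) -> None:
        if action == "heat":
            self.temperature += 1.0
        elif action == "cool":
            self.temperature -= 1.0


@dataclass
class ThermostatAgent:
    target: float
    tolerance: float = 0.5
    log: List[str] = field(default_factory=list)  # stored state: actions taken so far

    def decide(self, observed: float) -> str:
        """Choose an action from the current observation and the goal."""
        if observed < self.target - self.tolerance:
            return "heat"
        if observed > self.target + self.tolerance:
            return "cool"
        return "idle"

    def run(self, room: Room, max_steps: int = 20) -> None:
        """Observe, decide, and act repeatedly until the goal is met or steps run out."""
        for _ in range(max_steps):
            action = self.decide(room.temperature)  # perceive and decide
            if action == "idle":  # goal reached, nothing left to do
                return
            room.apply(action)  # act on the environment
            self.log.append(action)


room = Room(temperature=18.0)
agent = ThermostatAgent(target=21.0)
agent.run(room)
print(room.temperature, agent.log)
```

Nobody tells the agent to heat three times; that sequence falls out of the goal and the observed state. If the room had started above the target, the same loop would have cooled it instead.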

Detailed Analysis of Key Differences

Let’s compare agents and generative language models along the dimensions discussed above:

- Knowledge and Interaction with the External World:
  - Models: Their knowledge is limited to the training data, and they cannot interact directly with the external world.
  - Agents: Extend their knowledge by interacting with external systems through tools, accessing real-time data, and taking actions in the real world.

- Tools:
  - Models: Do not have native tools.
  - Agents: Natively implement tools to interact with external data and services, such as extensions, functions, and data stores.

- Logic and Reasoning:
  - Models: Generate responses based on a single inference or prediction derived from the user query.
  - Agents: Utilize reasoning techniques such as CoT and ReAct, often through pre-built agent frameworks like LangChain, which enable them to perform complex reasoning processes.

- Session Management:
  - Models: Do not natively manage session history or ongoing context unless explicitly implemented.
  - Agents: Manage session history, enabling multi-turn inferences and predictions based on user queries and previous decisions.

- Autonomy:
  - Models: Are limited to providing responses based on received input.
  - Agents: Are autonomous, can act independently of human intervention, and are proactive in achieving their objectives.

- Cognitive Architecture:
  - Models: Do not possess a cognitive architecture for managing memory, state, reasoning, and planning.
  - Agents: Utilize cognitive architectures to process information, make informed decisions, and refine subsequent actions.

Implications and Application Scenarios

The fundamental differences between agents and generative language models have significant implications for their applications.

- Generative Language Models: Are suitable for tasks involving text generation, machine translation, summarization, code generation, creative content creation, and basic customer service. They can also be used to answer factual questions, although their knowledge is limited to their training data and they cannot verify information.

- AI-Based Agents: Are capable of handling complex tasks that require interaction with the real world, reasoning, planning, and autonomy. They are suitable for tasks such as automating business processes, personal assistance, supply chain management, home automation, robotics, scientific research, and medical diagnostics.

In summary, generative language models and AI-based agents represent two distinct approaches within the field of AI, each with its own strengths and limitations. Models are focused on text generation and language analysis, while agents aim to create intelligent entities capable of acting autonomously in the real world. The choice between one or the other depends on the specific needs of the application.

Generative language models remain powerful tools for a wide range of natural language processing tasks, and their ongoing development keeps improving their quality and versatility. Agents, on the other hand, are more complex systems that open up new opportunities for applications requiring reasoning, autonomy, and interaction with the external world.

As AI continues to evolve, it is crucial to understand the differences between agents and generative language models in order to make the best use of their capabilities and develop innovative solutions to real-world challenges. Combining the best features of both, and continuing to explore their potential, is likely to shape the future of artificial intelligence.