The announcement of o3, OpenAI’s new language model, has sparked a wave of reactions in the tech world and beyond. Amid sensational headlines and bold predictions, it is crucial to analyze what this model truly represents and what its implications are.

Recap: From o1 to o3

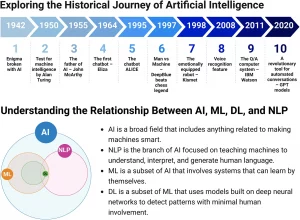

To fully grasp the significance of o3, it helps to briefly review its predecessors, particularly o1. Large language models (LLMs) like o1 and o3 are built on next-token prediction. Given a sequence of text (the prompt), the model predicts the most likely next token, which is a word or word fragment. That token is appended to the sequence and used to predict the following one, and so on, until a complete, coherent response has been generated.
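To make the loop concrete, here is a minimal Python sketch of greedy next-token generation. The probability table `NEXT_TOKEN_PROBS` and the function `generate` are hypothetical. A real LLM computes next-token probabilities with a neural network conditioned on the whole prompt, and it usually samples from those probabilities rather than always picking the single most likely token.

```python
# Hypothetical stand-in for an LLM: a hand-written table of next-token probabilities.
# A real model would compute these with a neural network conditioned on the whole prefix.
NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"model": 0.7, "answer": 0.3},
    "a": {"model": 0.5, "token": 0.5},
    "model": {"predicts": 0.9, "<end>": 0.1},
    "predicts": {"tokens": 0.8, "<end>": 0.2},
    "tokens": {"<end>": 1.0},
    "answer": {"<end>": 1.0},
    "token": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> list[str]:
    """Greedy decoding: repeatedly append the most probable next token."""
    sequence = ["<start>"]
    for _ in range(max_tokens):
        # This toy only looks at the last token; an LLM conditions on the full sequence.
        candidates = NEXT_TOKEN_PROBS[sequence[-1]]
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        sequence.append(next_token)
    return sequence[1:]  # drop the <start> marker


print(" ".join(generate()))  # prints "the model predicts tokens"
```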

A key technique for improving these models’ reasoning is Chain of Thought (CoT). Instead of jumping straight to an answer, the model is encouraged to write out its reasoning step by step. Working through intermediate steps tends to improve answers on multi-step problems, and it also makes the model’s reasoning easier to inspect. The technique can be applied both during inference (when the model is used) and during training.

During training, a verifier evaluates the correctness of the generated reasoning chains. Correct chains are rewarded and incorrect ones are penalized, so the model learns which reasoning pathways are most effective. This works best for problems with objectively verifiable solutions, such as mathematics, physics, or programming challenges. At inference time, models like o1 can also generate multiple candidate chains, evaluate them, and return the most promising response.
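OpenAI has not published the exact training or selection procedure used by o1. The following is therefore only a generic sketch of verifier-based best-of-N selection, and it assumes a simple outcome verifier that checks nothing but the final answer. The candidate chains, `make_verifier` and `best_of_n` are hypothetical illustrations. In a real system the chains would be sampled from the model.

```python
from typing import Callable

Chain = tuple[list[str], int]  # (reasoning steps, final answer)

# Hand-written, hypothetical candidate chains for "What is 17 * 24?".
# In a real system these would be sampled from the model.
CANDIDATES: list[Chain] = [
    (["17*24 = 17*20 + 17*4", "= 340 + 68", "= 408"], 408),
    (["17*24 = 17*25 - 17", "= 425 - 17", "= 418"], 418),  # slip in the last step
    (["17*24 = 10*24 + 7*24", "= 240 + 168", "= 408"], 408),
    (["17*24 = 17*20 + 17*4", "= 340 + 78", "= 418"], 418),  # 17*4 miscomputed as 78
]


def make_verifier(expected: int) -> Callable[[int], int]:
    """Objective outcome check: +1 for a correct final answer, -1 otherwise."""
    def reward(answer: int) -> int:
        return 1 if answer == expected else -1
    return reward


def best_of_n(chains: list[Chain], reward: Callable[[int], int]) -> Chain:
    """Score every candidate with the verifier and keep the best (first one on ties)."""
    return max(chains, key=lambda chain: reward(chain[1]))


verify = make_verifier(17 * 24)
print([verify(answer) for _, answer in CANDIDATES])  # [1, -1, 1, -1]
steps, answer = best_of_n(CANDIDATES, verify)
print(answer)  # 408
```

The same +1/-1 rewards that select the best chain here are the kind of signal that, during training, reinforces correct chains and penalizes incorrect ones.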

o3: An Evolution, Not a Revolution

o3 is not a groundbreaking novelty but an evolution of o1. It uses a similar architecture and is trained on more data, for longer, and with more computational power. In other words, it scales up what was already done with o1 and relies on the same Chain of Thought mechanism. The primary difference is scale, since more resources and data went into training. Other models, such as Claude 3.5 and Gemini 2.0, have adopted similar approaches, although with varying levels of transparency.

A natural question arises: why is there no o2? The answer is purely pragmatic. “O2” is already the name of a UK telecommunications company, so OpenAI chose o3 to avoid confusion.

o3’s Extraordinary Results

Although o3 is not yet publicly available, it has demonstrated remarkable results, which OpenAI presented in its announcement video. For now, only a select group of testers can access the model because of safety concerns and the high cost of running it. According to Nat McAleese, an OpenAI researcher, o3 builds on the reinforcement learning (RL) approach introduced with o1 and scales it further.

o3’s goal is no longer merely to predict the next word. Instead, it aims to produce a sequence of words that leads to an objectively correct response. This “sequence of words” represents a form of reasoning, though not a human-like one: by training on reasoning sequences, the model learns to simulate a reasoning process. The key requirement is that the correctness of the final answer can be verified objectively, which is why the approach works best in fields like mathematics and programming.
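The idea of rewarding a whole sequence only when its final answer checks out can be illustrated with REINFORCE, a basic policy-gradient algorithm. This is a toy sketch under strong assumptions and is not o3’s training method, which OpenAI has not disclosed. Here the “policy” is just a softmax over three hypothetical reasoning strategies for the question “What is 12 + 7 * 3?”. The reward is 1 when a strategy’s answer matches the verifiable result 33, and 0 otherwise. The names `STRATEGIES` and `train` are illustrative.

```python
import math
import random

# Hypothetical toy setup: the "policy" chooses one of three reasoning strategies
# for the question "What is 12 + 7 * 3?". Only one respects operator precedence.
STRATEGIES = {
    "left_to_right": lambda: (12 + 7) * 3,   # 57, wrong
    "precedence_first": lambda: 12 + 7 * 3,  # 33, correct
    "drop_multiplication": lambda: 12 + 7,   # 19, wrong
}
NAMES = list(STRATEGIES)
CORRECT_ANSWER = 33  # the objectively verifiable result


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps: int = 1000, lr: float = 0.5, seed: int = 0) -> list[float]:
    """REINFORCE without a baseline; returns the final strategy probabilities."""
    rng = random.Random(seed)
    logits = [0.0] * len(NAMES)
    for _ in range(steps):
        probs = softmax(logits)
        # Sample a strategy, run it, and check its final answer.
        action = rng.choices(range(len(NAMES)), weights=probs)[0]
        answer = STRATEGIES[NAMES[action]]()
        reward = 1.0 if answer == CORRECT_ANSWER else 0.0
        # d log softmax(action) / d logit_k = (1 if k == action else 0) - probs[k]
        for k in range(len(logits)):
            indicator = 1.0 if k == action else 0.0
            logits[k] += lr * reward * (indicator - probs[k])
    return softmax(logits)


for name, p in zip(NAMES, train()):
    print(f"{name}: {p:.3f}")
```

Incorrect strategies earn zero reward, so only correct samples move the logits, and probability mass shifts steadily toward the strategy that respects operator precedence. Real systems apply the same principle to sequences of tokens rather than three fixed choices, and they typically subtract a baseline to reduce variance.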

Benchmarks play a crucial role in evaluating machine learning model performance. They involve testing a model using a set of questions with known answers to assess response accuracy. These benchmarks focus on objectively measurable aspects, such as solving mathematical problems or coding tasks, rather than evaluating language quality.
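As an illustration of how such scoring works, here is a minimal sketch of exact-match accuracy on a hypothetical four-question benchmark. The questions, the `fake_model` lookup standing in for a model, and the light `normalize` step are all assumptions. Real benchmarks use task-specific checks, for example running unit tests on generated code.

```python
from typing import Callable

# Hypothetical mini-benchmark: questions paired with known reference answers.
BENCHMARK: list[tuple[str, str]] = [
    ("What is 2 + 2?", "4"),
    ("What is 10 / 4?", "2.5"),
    ("Reverse the string 'abc'.", "cba"),
    ("What is 3 ** 2?", "9"),
]

# Hypothetical model outputs; one answer is wrong.
FAKE_ANSWERS = {
    "What is 2 + 2?": "4",
    "What is 10 / 4?": "2",  # wrong
    "Reverse the string 'abc'.": " CBA ",
    "What is 3 ** 2?": "9",
}


def fake_model(question: str) -> str:
    """Stand-in for querying a model."""
    return FAKE_ANSWERS.get(question, "")


def normalize(text: str) -> str:
    """Ignore surrounding whitespace and letter case before comparing."""
    return text.strip().lower()


def accuracy(model: Callable[[str], str], benchmark: list[tuple[str, str]]) -> float:
    """Fraction of questions whose normalized answer matches the reference exactly."""
    correct = sum(normalize(model(q)) == normalize(ref) for q, ref in benchmark)
    return correct / len(benchmark)


print(accuracy(fake_model, BENCHMARK))  # 0.75
```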

Key Benchmarks of o3

Three primary benchmarks highlight o3’s capabilities:

- FrontierMath: This benchmark consists of highly complex, previously unpublished math problems designed by expert mathematicians, problems that typically take days or weeks to solve. Before o3, the best models solved only 2% of them, while o3 reached 25.2%. Because the problems had never been published, the model cannot have encountered them during training, which rules out data contamination. This suggests that such models can construct novel chains of reasoning, learned from textual data, to tackle complex problems, even if they do so differently from humans.

- SWE-bench: This benchmark assesses coding ability on real-world software engineering problems drawn from GitHub issues. o3 shows significant improvements over o1. In competitive programming on Codeforces, o3 ranked 175th globally, outperforming 99.9% of human participants.

- ARC Benchmark (Abstraction and Reasoning Corpus): Designed to be hard for AI systems, including language models, while remaining easy for humans, this benchmark presents visual grid puzzles. Solving them requires abstract reasoning and generalization from a few examples. The problems are split into a training set (with solutions) and an evaluation set whose solutions are withheld. Because the evaluation tasks differ from the training tasks, the model cannot simply memorize its way to the answers. In a high-efficiency configuration, o3 achieved a 75% success rate, a significant leap in performance. However, this setup costs about $20 per task, while a low-efficiency configuration, which uses far more compute, costs around $360 per task.
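The sketch below shows what an ARC-style task looks like and why memorizing training solutions does not help. It encodes a tiny hand-made task as grids of color indices, loosely following the `train`/`test` layout of the public ARC JSON task files. The solver is a classic brute-force baseline that searches a small, hypothetical library of grid transformations (`CANDIDATES`) for one that is consistent with every training pair. It is not how o3 works, since OpenAI has not detailed its approach.

```python
from typing import Callable, Optional

Grid = list[list[int]]  # each integer (0-9) encodes a color

# A tiny hand-made task: input/output training pairs plus a test input to solve.
TASK = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
        {"input": [[2, 2, 0], [0, 3, 0]], "output": [[0, 2, 2], [0, 3, 0]]},
    ],
    "test": [{"input": [[5, 0, 0], [0, 0, 7]]}],
}

# Hypothetical, deliberately tiny library of candidate transformations.
CANDIDATES: dict[str, Callable[[Grid], Grid]] = {
    "identity": lambda g: [row[:] for row in g],
    "flip_horizontal": lambda g: [row[::-1] for row in g],
    "flip_vertical": lambda g: [row[:] for row in g[::-1]],
    "transpose": lambda g: [list(col) for col in zip(*g)],
}


def solve(task: dict) -> tuple[Optional[str], list[Grid]]:
    """Return the first candidate consistent with every training pair and its test outputs."""
    for name, transform in CANDIDATES.items():
        if all(transform(p["input"]) == p["output"] for p in task["train"]):
            return name, [transform(t["input"]) for t in task["test"]]
    return None, []  # no candidate explains the training pairs


name, outputs = solve(TASK)
print(name, outputs)  # flip_horizontal [[[0, 0, 5], [7, 0, 0]]]
```

Here `flip_horizontal` is the only candidate that fits both training pairs, so the solver applies it to the test input. Real ARC tasks need far richer transformations, which is exactly why a fixed library like this one fails to generalize.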

Beyond the Numbers: The Nature of Intelligence

Despite its exceptional performance, it is crucial not to fall into the trap of hype and to recognize o3’s limitations. François Chollet, the creator of the ARC Benchmark, emphasized that excelling at this benchmark does not amount to achieving Artificial General Intelligence (AGI). By his definition, AGI will have been reached when it becomes impossible to create tasks that are easy for humans but difficult for AI. o3 still fails some tasks that humans find simple, which points to a fundamental difference from human intelligence.

It is important to understand that o3 is a powerful tool that excels at specific tasks, but it is not a replica of human intelligence. It can solve ultra-complex problems, such as FrontierMath challenges, that most humans cannot tackle. However, its focus on narrow, verifiable tasks may not be the best path toward human-like intelligence, which also involves interacting naturally with other people.

According to the video’s author, solving the ARC Benchmark is a necessary but not sufficient condition for declaring a language model AGI. The benchmark’s creator is already working on harder successors, ARC V2 and ARC V3.

Ethical Implications and Considerations

The technological advancements of o3 raise several critical questions:

- Scalable Oversight: If these systems become more intelligent than most users, who will control them, and how can we ensure they are used responsibly?

- Reproducibility and Consistency: For serious applications, these systems’ responses must be reproducible and consistent, not random or incorrect.

- Appropriate Benchmarks: It is necessary to develop benchmarks that assess these models’ capabilities appropriately, avoiding excessive focus on specific, objectively verifiable tasks.

It is crucial to be wary of sensationalist headlines and the hype surrounding these announcements. Many articles and YouTube videos claim that o3 has achieved AGI, but this claim is false and misleading. A more measured approach is needed, where each advancement is carefully evaluated and discussed by the scientific community.

Conclusion

o3 represents a significant step forward in artificial intelligence, demonstrating reasoning and problem-solving capabilities well beyond those of earlier models. However, it is essential to maintain a critical perspective and avoid the misconception that these advancements amount to AGI. o3 is not a human mind but a powerful tool that, if used responsibly, can lead to groundbreaking discoveries and applications.

The future challenge lies in developing AI models that not only excel in specific tasks but also interact with humans naturally and comprehend the world in its full complexity. Continued investment in research and development is crucial, but so is careful attention to the ethical and societal implications of these technologies.