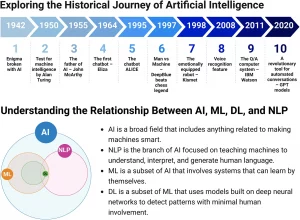

In recent years, large language models (LLMs) have made significant strides in natural language understanding and generation. These models, pre-trained on vast datasets and later aligned with human preferences, produce useful and coherent results. Despite these impressive outcomes, LLMs still face intrinsic limitations tied to model size and training data. Scaling them further is costly and often requires extensive retraining on trillions of tokens.

Collaboration Among LLMs: A New Frontier

With the growing number of LLMs available, leveraging the collective expertise of these models represents an exciting direction for research. Each LLM possesses unique strengths and specializations in various tasks. For instance, some models excel at following complex instructions, while others are better suited for code generation. The intriguing question is whether we can combine these skills to create a more capable and robust model.

A phenomenon known as “LLM collaborativity” highlights that an LLM tends to generate better responses when it can draw on outputs from other models. The improvement holds even when those auxiliary outputs come from less capable models and are of lower quality than what the LLM would produce on its own.

The Mixture of Agents (MoA) Methodology

The Mixture of Agents (MoA) methodology harnesses the collective strengths of multiple LLMs to enhance reasoning and language generation capabilities. Instead of relying on a single LLM, MoA employs various LLMs iteratively to refine responses. Here’s how it works:

- Multi-tier Architecture: MoA builds an architecture where each tier comprises multiple LLM agents.

- Auxiliary Inputs: Each agent receives outputs from the agents in the previous tier as auxiliary inputs to generate its own response.

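- LLM Collaboration: Because models tend to answer better when they can see other models’ outputs (LLM collaborativity), each tier can build on the responses of the tier before it.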

- Agent Selection: The choice of LLMs for each tier is based on performance metrics and response diversity.

- Agent Roles: LLMs can act as proposers, which generate useful reference responses for other models to use, or as aggregators, which synthesize responses from other models into a single, higher-quality output.

- Replication and Reaggregation: MoA introduces multiple aggregators that iteratively refine responses, utilizing the strengths of various models.
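The tiered flow described in the list above can be illustrated with a minimal sketch. It is not the reference implementation from the MoA study. The names `build_prompt`, `mixture_of_agents`, and `make_stub` are hypothetical, the prompt wording is an assumption, and each agent is a plain Python function standing in for a real LLM call.

```python
from typing import Callable, List

# An "agent" is any function mapping a prompt to a text response.
# This is a hypothetical stand-in for a real LLM call.
Agent = Callable[[str], str]


def build_prompt(question: str, previous: List[str]) -> str:
    """Attach the previous tier's responses to the question as auxiliary input."""
    if not previous:
        return question
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(previous, start=1))
    return f"Responses from other models:\n{numbered}\n\nQuestion: {question}"


def mixture_of_agents(question: str, tiers: List[List[Agent]], aggregator: Agent) -> str:
    """Run each tier of proposers in turn, then let the aggregator synthesize the answer."""
    previous: List[str] = []
    for tier in tiers:
        prompt = build_prompt(question, previous)
        # Every agent in the tier sees all outputs of the previous tier.
        previous = [agent(prompt) for agent in tier]
    return aggregator(build_prompt(question, previous))


def make_stub(name: str) -> Agent:
    """Toy agent that reports how many auxiliary responses it received."""
    def agent(prompt: str) -> str:
        seen = sum(1 for line in prompt.splitlines() if line[:1].isdigit())
        return f"{name} saw {seen} prior responses"
    return agent


tiers = [
    [make_stub("a"), make_stub("b"), make_stub("c")],  # tier 1: three proposers
    [make_stub("d"), make_stub("e")],                  # tier 2: two proposers
]
print(mixture_of_agents("What is MoA?", tiers, make_stub("agg")))
# prints: agg saw 2 prior responses
```

The stub agents only count the responses they were given, which makes the data flow between tiers easy to check. In practice each stub would be replaced by a call to a different model, chosen for performance and response diversity.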

Future Impact of MoA and LLM Collaboration

The study on MoA highlights the potential of this methodology to enhance the effectiveness of LLM-based virtual assistants, making AI more accessible and aligned with human reasoning. Representing intermediate results in natural language increases interpretability and facilitates better alignment with human preferences.

Optimizing the MoA architecture represents a promising area of future research, with the potential to further improve performance. However, because MoA aggregates responses iteratively, the final answer cannot begin until the last tier has been reached, which could result in a high time to first response and hurt the user experience. To mitigate this issue, the number of MoA tiers could be limited, or block-wise aggregation, which combines responses one block at a time instead of all at once, could be explored.
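The latency concern can be made concrete with a back-of-the-envelope model. The sketch below makes two assumptions: agents within a tier (level) run in parallel, and a tier must finish completely before the next one starts. The function name `time_to_first_token` and all latency figures are hypothetical, chosen only for illustration.

```python
from typing import List


def time_to_first_token(tier_latencies: List[List[float]], aggregator_first_token: float) -> float:
    """Estimate seconds until the user sees the first token of the final answer."""
    # A tier finishes only when its slowest agent finishes (agents assumed parallel).
    waiting = sum(max(tier) for tier in tier_latencies)
    return waiting + aggregator_first_token


# Hypothetical per-agent latencies in seconds, one inner list per tier.
full = time_to_first_token([[4.0, 6.0, 5.0], [5.0, 7.0], [6.0, 4.0]], aggregator_first_token=0.5)
limited = time_to_first_token([[4.0, 6.0, 5.0]], aggregator_first_token=0.5)
print(full, limited)  # prints: 19.5 6.5
```

Under these assumptions the wait grows with every added tier, which is why capping the number of tiers is the most direct mitigation.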

In conclusion, the MoA approach opens new perspectives for collaboration among language models, enhancing language generation and bringing us closer to more effective and intelligent AI systems.